Wednesday, December 15. 2010

Hey Drummonds forget SSD – RAM is the future

Sorry for my rude title Scott :-) but I was just on the phone with my ex-Capgemini colleague Ernst Cozijnsen, and we had a long discussion about super fast storage for replicas in a View environment. In the end we settled on RAM. I immediately jumped into my lab to set up a proof of concept to see how it performs, and it's pure awesomeness: faster than fast and quicker than quick. Want to see what we discussed? Well, here's the story. In the proof of concept I'm using a 64-bit Windows Server 2008 Enterprise machine with 7 GB of RAM and the StarWind iSCSI target software. I've configured this iSCSI target with a 6 GB RAM disk and created a VMFS datastore on it. I've cloned a Windows 2003 virtual machine to the newly created VMFS hosted in RAM and did some performance checks. I was astounded.

Friday, December 10. 2010

Technical Papers - VMware High Availability (HA): Deployment Best Practices

This paper describes best practices and guidance for properly deploying VMware HA in VMware vSphere 4.1. These include discussions on proper network and storage design, and recommendations on settings for host isolation response and admission control.

Saturday, December 4. 2010

VMware Product Demo - Memory Compression

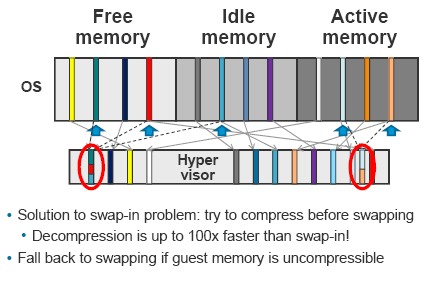

With Transparent Memory Compression, vSphere 4.1 compresses memory on the fly to increase the amount of memory that appears to be available to a given VM. Transparent Memory Compression is of interest for workloads where memory, rather than CPU cycles, is the limiting resource. ESX/ESXi provides a memory compression cache to improve virtual machine performance when memory is overcommitted. Memory Compression is enabled by default and comes into play when a host's memory becomes overcommitted: ESX/ESXi then compresses virtual pages and stores them in memory.

Because accessing compressed memory is faster than accessing memory swapped to disk, Memory Compression lets ESX/ESXi overcommit memory without significantly hindering performance. When a virtual page needs to be swapped, ESX/ESXi first attempts to compress it. Pages that can be compressed to 2 KB or smaller are stored in the virtual machine's compression cache, increasing the capacity of the host. You can set the maximum size of the compression cache, or disable Memory Compression altogether, using the Advanced Settings dialog box in the vSphere Client.
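To make the 2 KB rule concrete, here is a minimal sketch of the per-page decision. It assumes 4 KB guest pages and uses Python's `zlib` purely as a stand-in compressor; the algorithm ESX/ESXi actually uses is not assumed, and `try_compress_page` is a hypothetical name.

```python
import os
import zlib

PAGE_SIZE = 4096         # assumption: 4 KB guest pages
COMPRESSED_LIMIT = 2048  # from the text: only pages that compress to <= 2 KB are cached


def try_compress_page(page: bytes):
    """Return ("cache", compressed) if the page fits in 2 KB, else ("swap", page).

    zlib is only a stand-in for whatever compressor ESX/ESXi really uses.
    """
    if len(page) != PAGE_SIZE:
        raise ValueError("expected a 4 KB page")
    compressed = zlib.compress(page)
    if len(compressed) <= COMPRESSED_LIMIT:
        return "cache", compressed  # kept in the VM's compression cache
    return "swap", page             # too big after compression: falls back to swapping


if __name__ == "__main__":
    zero_page = bytes(PAGE_SIZE)         # highly compressible
    random_page = os.urandom(PAGE_SIZE)  # effectively incompressible
    print(try_compress_page(zero_page)[0])    # cache
    print(try_compress_page(random_page)[0])  # swap
```

Pages full of zeros or repeated patterns land in the cache, while pages of random-looking data still go to swap, which is why the benefit depends on what the guest keeps in memory.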

Whitepaper - Maximizing Virtual Machine Performance

VM performance is ultimately determined by the underlying physical hardware that serves as the foundation for your virtual infrastructure. Building this foundation has become simpler over the years, but there are still several areas that should be fine-tuned to maximize VM performance in your environment. While some of the content applies to any hypervisor, this document focuses on VMware ESX(i) 4.1. It is an introduction to performance tuning and is not intended to cover everything in detail. Most topics link to sites that contain deep-dive information if you wish to learn more.

Thursday, December 2. 2010

vStorage APIs for Array Integration (VAAI) product demo

The vStorage API for Array Integration (VAAI) is a new API for storage partners to leverage as a means to speed up certain functions that, when delegated to the storage array, can greatly enhance performance. This API is currently supported by several storage partners and requires these partners to release a special version of their firmware to work with this API. In the vSphere 4.1 release, this array offload capability supports three primitives:

- Full copy enables the storage arrays to make full copies of data within the array without having the ESX server read and write the data.

- Block zeroing enables storage arrays to zero out a large number of blocks to speed up provisioning of virtual machines.

- Hardware-assisted locking provides an alternative means to protect the VMFS metadata, improving scalability when many ESX hosts share a datastore.

http://www.vmware.com/files/pdf/techpaper/VMW-Whats-New-vSphere41-Storage.pdf
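The difference behind hardware-assisted locking is lock granularity: a classic SCSI reservation blocks the whole LUN for every other host, while an atomic test-and-set touches only one on-disk lock record. The toy model below illustrates that contrast. It is not VMware's or any array's implementation; `ToyLun`, `reserve` and `test_and_set` are hypothetical names, and the code is single-threaded, so atomicity is assumed rather than enforced.

```python
from typing import Dict, Optional


class ToyLun:
    """Toy model of VMFS lock records on one LUN (illustrative only)."""

    def __init__(self) -> None:
        self.lock_records: Dict[int, Optional[str]] = {}  # block -> owning host, None = free
        self.reserved_by: Optional[str] = None            # holder of a whole-LUN reservation

    # Without VAAI: a SCSI reservation locks the entire device.
    def reserve(self, host: str) -> bool:
        if self.reserved_by in (None, host):
            self.reserved_by = host
            return True
        return False  # every other host is blocked, whatever block it wanted

    def release(self, host: str) -> None:
        if self.reserved_by == host:
            self.reserved_by = None

    # With hardware-assisted locking: test-and-set on a single record.
    def test_and_set(self, block: int, expected: Optional[str], new: Optional[str]) -> bool:
        # Modelled as one atomic compare-then-write step inside the array.
        if self.lock_records.get(block) == expected:
            self.lock_records[block] = new
            return True
        return False


if __name__ == "__main__":
    lun = ToyLun()
    # Reservation style: esxB is blocked even though it wants a different block.
    print(lun.reserve("esxA"), lun.reserve("esxB"))  # True False
    lun.release("esxA")
    # Test-and-set style: A and B lock different blocks; B loses only on block 1.
    print(lun.test_and_set(1, None, "esxA"),
          lun.test_and_set(2, None, "esxB"),
          lun.test_and_set(1, None, "esxB"))         # True True False
```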

Storage I/O Control product demo

With the release of vSphere 4.1, Storage IO Control allows cluster-wide storage IO prioritization. This allows better workload consolidation and helps reduce the extra costs associated with over-provisioning. Storage IO Control extends the constructs of shares and limits to handle storage IO resources. The amount of storage IO allocated to virtual machines during periods of IO congestion can be controlled, which ensures that more important virtual machines get preference over less important ones.

When Storage IO Control is enabled on a datastore, ESX/ESXi begins to monitor the device latency that hosts observe when communicating with that datastore. When device latency exceeds a threshold, the datastore is considered congested, and each virtual machine that accesses it is allocated IO resources in proportion to its shares. Shares are set per virtual machine and can be adjusted for each one based on need. Without this control, low-priority VMs can eat into the IO bandwidth of high-priority VMs; with it, storage allocation stays in line with VM priorities.

This feature enables per-datastore priorities (shares) for VMs to improve total throughput, and enforces those shares at the cluster level for all workloads accessing a datastore. Configuring Storage I/O Control is a two-step process:

1. Enable Storage I/O Control for the datastore.

2. Set the number of storage I/O shares and upper limit of I/O operations per second (IOPS) allowed for each virtual machine. By default, all virtual machine shares are set to Normal (1000) with unlimited IOPS.
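The share arithmetic can be sketched as a proportional split of a congested datastore's throughput, honoring per-VM IOPS limits and redistributing what capped VMs leave unused. This is a simplified model of the outcome, not the mechanism: the real feature throttles per-host device queue depths. The Low/Normal/High values (500/1000/2000) and the 30 ms threshold are assumed to mirror the vSphere 4.1 defaults; `Vm`, `allocate_iops` and the capacity figure are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

SHARES = {"Low": 500, "Normal": 1000, "High": 2000}  # assumed default share levels


@dataclass
class Vm:
    name: str
    shares: int = SHARES["Normal"]
    iops_limit: Optional[int] = None  # None = unlimited (the default)


def allocate_iops(vms: List[Vm], capacity_iops: float, latency_ms: float,
                  threshold_ms: float = 30.0) -> Dict[str, Optional[float]]:
    """Model how a congested datastore's IOPS would be divided by shares."""
    if latency_ms <= threshold_ms:
        # Not congested: shares are not enforced; only IOPS limits apply.
        return {vm.name: vm.iops_limit for vm in vms}
    alloc: Dict[str, Optional[float]] = {}
    remaining = float(capacity_iops)
    active = list(vms)
    while active:
        total = sum(vm.shares for vm in active)
        # VMs whose proportional slice exceeds their limit get exactly their limit.
        capped = [vm for vm in active if vm.iops_limit is not None
                  and vm.iops_limit < remaining * vm.shares / total]
        if not capped:
            for vm in active:
                alloc[vm.name] = remaining * vm.shares / total
            break
        for vm in capped:
            alloc[vm.name] = float(vm.iops_limit)
            remaining -= vm.iops_limit  # leftover is redistributed next round
            active.remove(vm)
    return alloc


if __name__ == "__main__":
    vms = [Vm("sql", SHARES["High"]), Vm("web"), Vm("test", SHARES["Low"], iops_limit=100)]
    print(allocate_iops(vms, capacity_iops=3500, latency_ms=45))
```

With 3500 IOPS available and latency above the threshold, the capped test VM gets its 100 IOPS and the remaining 3400 are split 2:1 between the High and Normal VMs.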

Duncan Epping : Storage IO Control, the movie

Scott Drummonds : Storage IO Control

VirtualMiscellaneous : Storage IO Control - SIOC

Thursday, November 25. 2010

Transitioning to ESXi with vSphere 4.1

While I was prepping my upcoming “vSphere Advanced Troubleshooting” presentation for the Dutch VMUG event 2010, I stumbled upon a great presentation by Mark Monce. It’s called “Transitioning to ESXi with vSphere 4.1” and contains a lot of information like:

- Overview of ESXi

- Hardware Monitoring and Systems Management

- Infrastructure Services

- Command Line Interfaces

- Diagnostics and Troubleshooting

One thing I didn’t know was that you can use your web browser to retrieve some essential ESXi diagnostic information.

Browser-based Access of Config Files

https://<hostname>/host

Browser-based Access of Log Files

https://<hostname>/host/messages

Browser-based Access of Datastore Files

https://<hostname>/folder
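The same URLs can be pulled from a script, for example to grab the messages log during troubleshooting. A minimal sketch using only the Python standard library, assuming the pages accept HTTP Basic authentication with a host login; `diagnostic_urls`, `fetch` and the hostname in the usage note are hypothetical. The `verify_tls=False` switch exists only because ESXi ships with a self-signed certificate by default and should be confined to a lab.

```python
import base64
import ssl
import urllib.request

# Paths taken from the post; everything else here is an illustrative assumption.
PATHS = {
    "config": "/host",
    "messages": "/host/messages",
    "datastores": "/folder",
}


def diagnostic_urls(hostname: str) -> dict:
    """Build the browser-accessible diagnostic URLs for one ESXi host."""
    return {name: f"https://{hostname}{path}" for name, path in PATHS.items()}


def fetch(url: str, user: str, password: str, verify_tls: bool = True) -> str:
    """GET a page with an HTTP Basic auth header (assumed to be accepted)."""
    ctx = ssl.create_default_context()
    if not verify_tls:
        # Lab only: skip certificate checks for the default self-signed cert.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(req, context=ctx, timeout=30) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Usage would look like `fetch(diagnostic_urls("esx01.lab.local")["messages"], "root", "secret", verify_tls=False)`, where the hostname and credentials are placeholders.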

Saturday, November 20. 2010

How to configure advanced VMXNET3 settings

Receive packets might be dropped at the virtual switch if the virtual machine’s network driver runs out of receive (Rx) buffers, that is, a buffer overflow. The number of dropped packets can be reduced by increasing the Rx buffers for the virtual network driver.

In ESX 4.1, you can configure the following parameters from the Device Manager (a Control Panel dialog box) in Windows guest operating systems: Rx Ring #1 Size, Rx Ring #2 Size, Tx Ring Size, Small Rx Buffers, and Large Rx Buffers.

The default value of the size of the first Rx ring, Rx Ring #1 Size, is 512. You can modify the number of Rx buffers separately using the Small Rx Buffers parameter. The default value is 1024.

For some workloads (for example, traffic that arrives in bursts) you might need to increase the size of the ring, while for others (for example, applications that are slow to process receive traffic) you might increase the number of receive buffers.

When jumbo frames are enabled, you might use a second ring, Rx Ring #2 Size. The default value of Rx Ring #2 Size is 32. With jumbo frames enabled, the Large Rx Buffers parameter controls the number of large buffers used by both Rx Ring #1 and Rx Ring #2. The default value of Large Rx Buffers is 768.
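Why a bigger ring helps with bursty traffic can be shown with a tiny queue simulation: packets that arrive when the ring is full are dropped. This is a simplified model, not the VMXNET3 driver. It treats the ring and its buffers as one fixed-size queue, and the arrival pattern and per-tick drain rate are hypothetical numbers chosen for illustration.

```python
from typing import List


def simulate_rx(arrivals: List[int], ring_size: int, drain_per_tick: int) -> int:
    """Count packets dropped when arrivals overflow a fixed-size receive ring.

    arrivals: packets arriving in each tick (hypothetical traffic pattern)
    ring_size: receive slots available to the guest driver
    drain_per_tick: packets the guest processes per tick
    """
    queued = 0
    dropped = 0
    for incoming in arrivals:
        accepted = min(incoming, ring_size - queued)  # only free slots can be filled
        dropped += incoming - accepted                # the rest is lost at the vSwitch
        queued += accepted
        queued -= min(queued, drain_per_tick)         # guest empties part of the ring
    return dropped


if __name__ == "__main__":
    burst = [1000] + [0] * 9  # one burst of 1000 packets, then silence
    print(simulate_rx(burst, ring_size=512, drain_per_tick=100))   # 488
    print(simulate_rx(burst, ring_size=1024, drain_per_tick=100))  # 0
```

With the default Rx Ring #1 Size of 512, the burst loses 488 packets in this model; doubling the ring absorbs it completely. If the guest is persistently slower than the arrival rate, a larger queue only postpones the drops, which is the case the post addresses with more receive buffers rather than a bigger ring.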

Friday, November 12. 2010

Understanding and Using vStorage APIs for Array Integration and NetApp Storage

This document describes the technical aspects of NetApp support and integration with VMware® vStorage APIs for Array Integration (VAAI), as well as how to deploy and use this technology. VAAI is a set of application programming interfaces (APIs) and SCSI commands that offload certain I/O-intensive tasks to NetApp® storage systems. By integrating with vStorage APIs, NetApp enables advanced storage capabilities to be accessed and executed from familiar VMware interfaces, improving manageability, performance, data mobility, and data protection.

Wednesday, November 3. 2010

Performance Best Practices for VMware vSphere 4.1

VMware has released another great “technical paper”, Performance Best Practices for VMware vSphere 4.1. It can be found in the Technical Resource Center, which, by the way, contains a lot of awesome docs.

The technical paper, Performance Best Practices for VMware vSphere 4.1, provides performance tips that cover the most performance-critical areas of VMware vSphere 4.1. It is not intended as a comprehensive guide for planning and configuring your deployments.

Chapter 1 - “Hardware for Use with VMware vSphere,” provides guidance on selecting hardware for use with vSphere.

Chapter 2 - “ESX and Virtual Machines,” provides guidance regarding VMware ESX™ software and the virtual machines that run in it.

Chapter 3 - “Guest Operating Systems,” provides guidance regarding the guest operating systems running in vSphere virtual machines.

Chapter 4 - “Virtual Infrastructure Management,” provides guidance regarding resource management best practices.

http://www.vmware.com/pdf/Perf_Best_Practices_vSphere4.1.pdf