vSphere supports several command-line interfaces for managing your virtual infrastructure, including the vSphere Command-Line Interface (vCLI), a set of ESXi Shell commands, and PowerCLI. You can choose the CLI set best suited to your needs and write scripts to automate your CLI tasks.

The vCLI command set includes vicfg- commands and ESXCLI commands. The ESXCLI commands included in the vCLI package are equivalent to the ESXCLI commands available in the ESXi Shell. The vicfg- command set is similar to the deprecated esxcfg- command set in the ESXi Shell.

You can manage many aspects of an ESXi host with the ESXCLI command set. You can run ESXCLI commands as vCLI commands, or run them in the ESXi Shell in troubleshooting situations. You can also run ESXCLI commands from the PowerCLI shell by using the Get-EsxCli cmdlet (see the vSphere PowerCLI Administration Guide and the vSphere PowerCLI Reference). The set of ESXCLI commands available on a host depends on the host configuration, and the vSphere Command-Line Interface Reference lists help information for all ESXCLI commands. Before you run a command on a host, run esxcli --server <MyESXi> --help to verify that the command is defined on the host you are targeting.
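To make the verification step concrete, here is a minimal Python sketch that builds and optionally runs the `esxcli --server <MyESXi> --help` command described above. It assumes the vCLI `esxcli` binary is on your PATH and that authentication is handled separately (not shown); the helper names and the host name `esxi01.example.com` are hypothetical.

```python
import shlex
import subprocess


def esxcli_help_argv(server: str) -> list[str]:
    """Argument vector for `esxcli --server <server> --help` (hypothetical helper)."""
    return ["esxcli", "--server", server, "--help"]


def run_help(server: str) -> str:
    """Run the help command against `server` and return its output.

    Assumes vCLI's esxcli is installed and on PATH; raises
    subprocess.CalledProcessError if the command exits non-zero.
    """
    result = subprocess.run(
        esxcli_help_argv(server), capture_output=True, text=True, check=True
    )
    return result.stdout


if __name__ == "__main__":
    # Only print the command line, so this runs even without vCLI installed.
    print(shlex.join(esxcli_help_argv("esxi01.example.com")))
```

Passing an argument list (rather than one shell string) to `subprocess.run` avoids shell quoting problems with host names.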

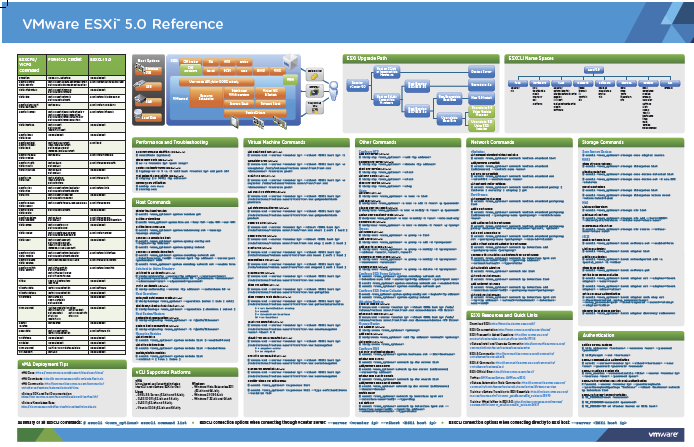

You can use this link to get your copy of the VMware ESXi 5.0 Reference Poster.

Friday, September 30. 2011

VMware ESXCLI 5.0 Reference Poster

Tuesday, September 27. 2011

Video - Metro vMotion in vSphere 5.0

vSphere 5 introduces a new latency-aware Metro vMotion feature that not only provides better performance over long-latency networks but also raises the supported round-trip latency limit for vMotion networks from 5 milliseconds to 10 milliseconds. In vSphere 4.1, vMotion is supported only when the latency between the source and destination ESXi/ESX hosts is less than 5 ms RTT (round-trip time); at higher latencies, not all workloads are guaranteed to converge. With Metro vMotion in vSphere 5.0, vMotion can move a running virtual machine between source and destination hosts whose latency exceeds 5 ms RTT, up to a new maximum supported round-trip latency of 10 ms. Metro vMotion is available only with a vSphere Enterprise Plus license.
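The latency rules above can be summarized in a small Python sketch. It checks only the RTT limit and license requirement stated in this post and ignores every other vMotion prerequisite; the `HostPair` type, the version encoding, and the boundary handling (strictly below 5 ms, up to and including 10 ms) are assumptions for illustration.

```python
from dataclasses import dataclass

# Round-trip latency limits stated in the post, in milliseconds.
VSPHERE41_MAX_RTT_MS = 5.0   # vSphere 4.1: supported only below 5 ms RTT
METRO_MAX_RTT_MS = 10.0      # vSphere 5.0 Metro vMotion: up to 10 ms RTT


@dataclass
class HostPair:
    """Hypothetical description of a source/destination host pair."""
    rtt_ms: float             # measured round-trip latency between the hosts
    vsphere_version: str      # "4.1" or "5.0" (simplified encoding)
    enterprise_plus: bool     # Metro vMotion requires Enterprise Plus


def vmotion_latency_supported(pair: HostPair) -> bool:
    """Check only the latency rule; other vMotion prerequisites are ignored."""
    if pair.rtt_ms < VSPHERE41_MAX_RTT_MS:
        return True  # within the classic limit on either version
    if pair.vsphere_version == "5.0" and pair.enterprise_plus:
        return pair.rtt_ms <= METRO_MAX_RTT_MS  # Metro vMotion range
    return False


if __name__ == "__main__":
    for pair in (HostPair(3.0, "4.1", False),
                 HostPair(8.0, "4.1", True),
                 HostPair(8.0, "5.0", True),
                 HostPair(8.0, "5.0", False)):
        print(pair, vmotion_latency_supported(pair))
```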

Related Video – Carter Shanklin’s WANatronic 10001

Monday, September 26. 2011

Forbes Guthrie has released the VCP5 documentation notes


Fellow vExpert Forbes Guthrie has released his vSphere 5.0 documentation notes.

Here are my condensed notes for the vSphere 5.0 documentation. They’re excerpts taken directly from VMware’s own official PDFs. The notes aren’t meant to be comprehensive, or for a beginner; they’re just my own personal notes. I made them whilst studying for the VCP5 beta exam, and it’s part of the process I use to collate information for the vSphere Reference Card.

Thursday, September 22. 2011

Technical Paper - VMware vShield App Protecting Virtual SAP Deployments

This paper describes some use cases for deploying SAP with vShield App. vShield App provides microsegmentation (zoning) of different landscapes, which enables a secure SAP deployment. The configurations covered here are examples only, but they provide a starting point for planning a security architecture for an SAP installation on VMware in production and non-production environments.

Workload characterizations conducted against SAP show that the vShield firewall virtual machines require CPU resources, and that the amount required depends on the network traffic generated by the application. When additional firewall capacity is needed, administrators can add CPU or memory resources to the vShield App appliance. If the cluster is resource-limited, administrators can add another host to the cluster along with the vShield App appliance and the hypervisor module.

Customer workloads will differ from those tested here, resulting in different utilization of the vShield App firewall appliance. Designing systems as two-tier instead of three-tier would reduce network traffic between virtual machines and lower firewall appliance utilization; for example, some SAP customers may deploy database and application instances in a single large virtual machine.

Categorizing applications into a container such as a vApp greatly simplifies management of firewall policies with vShield App. Application and security administrators can respond rapidly to specific demands in a dynamic landscape: while virtual machine templates enable quick deployment of systems, vShield App facilitates speedy security compliance.

Wednesday, September 21. 2011

Technical Paper - Creating a vSphere Update Manager Depot

- A vendor-index.xml file. The index lists the product ID and version number, and points to metadata.zip files.

- One or more metadata.zip files. The metadata.zip files point to the location of the VIB files. Names of metadata.zip files cannot conflict with other filenames within your set of files or with the files of other vendors.

- One or more bulletins describing the VIB file. A bulletin is an XML file that describes the VIB included in a consumable package, metadata.zip. At least one bulletin must be included in every metadata.zip file.

- One or more VIB files.

From the vendor depot, Update Manager downloads a list of supported vendor Web site URLs. Based on this list, Update Manager obtains a list of metadata URLs from the publishing Web site and downloads the metadata from there. The metadata, in turn, contains the URLs for the VIB files. You can create the files required for an update depot by using VIB tools that are part of VMware Workbench. To create an online depot, unzip the contents of an offline bundle created by Workbench to the Web server root directory.
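The depot rules and lookup chain described above can be modeled in a short Python sketch. The classes are in-memory stand-ins, not the real vendor-index.xml or metadata.zip formats, and all product IDs, file names, and URLs in the usage are hypothetical. The check enforces two stated rules: metadata.zip names must not conflict with each other or with other files, and each metadata.zip must include at least one bulletin.

```python
from dataclasses import dataclass, field


@dataclass
class MetadataZip:
    """One metadata.zip: the bulletins it carries and the VIB URLs it points to."""
    filename: str
    bulletins: list[str] = field(default_factory=list)
    vib_urls: list[str] = field(default_factory=list)


@dataclass
class VendorIndex:
    """In-memory stand-in for vendor-index.xml (not its real XML schema)."""
    product_id: str
    version: str
    metadata: list[MetadataZip] = field(default_factory=list)


def check_depot(index: VendorIndex, other_files: set[str]) -> list[str]:
    """Return rule violations; an empty list means no problems were found."""
    problems = []
    seen = set()
    for md in index.metadata:
        # metadata.zip names must not clash with each other or other files
        if md.filename in seen or md.filename in other_files:
            problems.append(f"name conflict: {md.filename}")
        seen.add(md.filename)
        # every metadata.zip needs at least one bulletin
        if not md.bulletins:
            problems.append(f"no bulletin in {md.filename}")
    return problems


def resolve_vib_urls(index: VendorIndex) -> list[str]:
    """Walk index -> metadata -> VIB URLs, the lookup order described above."""
    return [url for md in index.metadata for url in md.vib_urls]
```

For example, an index listing two files both named `metadata.zip`, the second without a bulletin, produces two violations, while `resolve_vib_urls` still returns every VIB URL in index order.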

http://www.vmware.com/files/pdf/techpaper/vsphere-50-update-manager-depot.pdf

Saturday, September 17. 2011

Video - Running vMotion on multiple network adapters

I’ve created a really cool video that shows you how to configure your vSphere 5 network to use multiple network adapters for vMotion. The idea for this video came from fellow VCI and virtualization friend Frank Brix Pedersen. More information about vMotion Architecture, Performance, and Best Practices in VMware vSphere 5 can be found here.

When using the multiple-network adapter feature, configure all the vMotion vmnics under one vSwitch and create one vMotion vmknic for each vmnic. In the vmknic properties, configure each vmknic to use a different vmnic as its active vmnic, with the rest marked as standby. This way, if any of the vMotion vmnics become disconnected or fail, vMotion will transparently switch over to one of the standby vmnics. When all your vmnics are functional, though, each vmknic will route traffic over its assigned, dedicated vmnic.
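The active/standby layout described above can be sketched in Python as plain data. This models only the teaming logic, not any vSphere API; the vmk/vmnic names are hypothetical, and picking the first reachable standby in list order is an assumption about failover order.

```python
from typing import Optional


def build_vmknics(vmnics: list[str]) -> dict[str, dict[str, list[str]]]:
    """One vMotion vmknic per vmnic: that vmnic active, all others standby."""
    policies = {}
    for i, active in enumerate(vmnics, start=1):
        standby = [nic for nic in vmnics if nic != active]
        policies[f"vmk{i}"] = {"active": [active], "standby": standby}
    return policies


def current_uplink(policy: dict[str, list[str]], link_up: dict[str, bool]) -> Optional[str]:
    """Active vmnic if it is up, otherwise the first standby vmnic that is up."""
    for nic in policy["active"] + policy["standby"]:
        if link_up.get(nic, False):
            return nic
    return None  # every uplink is down


if __name__ == "__main__":
    policies = build_vmknics(["vmnic2", "vmnic3"])
    print(policies)
    # vmnic2 fails: vmk1 fails over to vmnic3, vmk2 keeps its dedicated vmnic3
    status = {"vmnic2": False, "vmnic3": True}
    for vmk, policy in policies.items():
        print(vmk, current_uplink(policy, status))
```

With every vmnic up, each vmknic stays on its own dedicated uplink; the standby list only matters after a failure.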

Monday, September 12. 2011

vMotion Architecture, Performance, and Best Practices in VMware vSphere 5

VMware’s Sreekanth Setty, a staff member of the Performance Engineering team, has published a great technical white paper on vMotion Architecture, Performance, and Best Practices in VMware vSphere 5.

VMware vSphere vMotion is one of the most popular features of VMware vSphere. vMotion provides invaluable benefits to administrators of virtualized datacenters. It enables load balancing, helps prevent server downtime, enables troubleshooting and provides flexibility—with no perceivable impact on application availability and responsiveness.

vSphere 5 introduces a number of performance enhancements and new features in vMotion, among them a multiple-network adapter capability, better utilization of 10GbE bandwidth, Metro vMotion, and optimizations that further reduce the impact on application performance.

A series of tests were conducted to quantify the performance gains of vMotion in vSphere 5 over vSphere 4.1 in a number of scenarios including Web servers, messaging servers and database servers. An evacuation scenario was also performed in which a large number of virtual machines were migrated.

Test results show the following:

- Improvements in vSphere 5 over vSphere 4.1 are twofold: the duration of vMotion and the impact on application performance during vMotion.

- vMotion duration shows consistent gains in the range of 30% on vSphere 5, due to the optimizations introduced in vMotion.

- The newly added multiple-network adapter feature in vSphere 5 results in dramatic performance improvements over vSphere 4.1 (for example, vMotion duration is reduced by more than a factor of 3).

Friday, September 9. 2011

vSphere 5 Product Documentation - PDF and E-book Formats

VMware really did an outstanding job of making vSphere 5 information available at its new vSphere 5 Documentation Center. It offers a wide range of documents in searchable HTML format, and every guide is also available in PDF, ePub and mobi format. There is even a link to a single downloadable zip file containing all the vSphere 5 PDFs you need.

Archive of all PDFs in this list [zip]

vSphere Basics [pdf | epub | mobi]

vSphere Installation and Setup [pdf | epub | mobi]

vSphere Upgrade [pdf | epub | mobi]

vSphere vCenter Server and Host Management [pdf | epub | mobi]

vSphere Virtual Machine Administration [pdf | epub | mobi]

vSphere Host Profiles [pdf | epub | mobi]

vSphere Networking [pdf | epub | mobi]

vSphere Storage [pdf | epub | mobi]

vSphere Security [pdf | epub | mobi]

vSphere Resource Management [pdf | epub | mobi]

vSphere Availability [pdf | epub | mobi]

vSphere Monitoring and Performance [pdf | epub | mobi]

vSphere Troubleshooting [pdf | epub | mobi]

vSphere Examples and Scenarios [pdf | epub | mobi]

Thursday, September 8. 2011

Understanding Memory Management in VMware vSphere 5

vSphere 5.0 leverages the advantages of several memory reclamation techniques to allow users to overcommit host memory. With the support of memory overcommitment, vSphere 5.0 can achieve a remarkable VM consolidation ratio while maintaining reasonable performance. This paper presents how ESXi in vSphere 5.0 manages host memory and how the memory reclamation techniques efficiently reclaim host memory without much impact on VM performance. A share-based memory management mechanism is used to enable both performance isolation and efficient memory utilization. The algorithm relies on a working set estimation technique that measures the idleness of a VM. Ballooning reclaims memory from a VM by implicitly causing the guest OS to invoke its own memory management technique. Transparent page sharing exploits sharing opportunities within and between VMs without any guest OS involvement. Memory compression reduces the number of host-swapped pages by storing the pages in compressed form in a per-VM memory compression cache. Swap to SSD leverages the low read latency of SSDs to alleviate the host swapping penalty. Finally, a high-level dynamic memory reallocation policy coordinates all these techniques to efficiently support memory overcommitment.
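The share-based mechanism and working set estimation can be illustrated with the idle memory tax formulation from the original ESX Server memory management design. We assume, without confirmation from this paper, that it still applies in vSphere 5.0. A VM's shares-per-page ratio is rho = S / (P * (f + k * (1 - f))), where S is its shares, P its allocated pages, f its estimated active fraction, and k = 1 / (1 - tau) for idle tax rate tau. Memory is reclaimed first from the VM with the lowest rho. The 0.75 default tax rate and the VM values are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class VM:
    """Hypothetical per-VM memory accounting inputs."""
    name: str
    shares: float         # memory shares S
    pages: float          # pages currently allocated P
    active_frac: float    # estimated active (working-set) fraction f in [0, 1]


def shares_per_page(vm: VM, tax_rate: float = 0.75) -> float:
    """Idle-taxed ratio rho = S / (P * (f + k * (1 - f))), k = 1 / (1 - tax_rate).

    Idle pages are charged k times as much as active ones, so a mostly idle
    VM ends up with a lower ratio than a busy VM holding the same shares.
    """
    k = 1.0 / (1.0 - tax_rate)
    return vm.shares / (vm.pages * (vm.active_frac + k * (1.0 - vm.active_frac)))


def reclaim_victim(vms: list[VM], tax_rate: float = 0.75) -> VM:
    """Pick the VM to reclaim from first: the one with the lowest ratio."""
    return min(vms, key=lambda vm: shares_per_page(vm, tax_rate))


if __name__ == "__main__":
    busy = VM("busy", shares=1000, pages=1000, active_frac=1.0)
    idle = VM("idle", shares=1000, pages=1000, active_frac=0.0)
    print(shares_per_page(busy), shares_per_page(idle), reclaim_victim([busy, idle]).name)
```

With equal shares and allocations, the fully idle VM is charged four times as much per page (k = 4), so it is the one asked to give memory back.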

Tuesday, September 6. 2011

Performance Implications of Storage I/O Control-Enabled NFS Datastores in vSphere 5.0

Application performance can be impacted when servers contend for I/O resources in a shared storage environment. High-priority applications therefore need to be isolated from low-priority ones by appropriately prioritizing access to shared I/O resources. Storage I/O Control (SIOC) provides a dynamic control mechanism for managing I/O resources across virtual machines in a cluster. This feature was introduced in vSphere 4.1 with support for VMs that share a storage area network (SAN). In VMware vSphere 5.0, this feature has been extended to support network attached storage (NAS) datastores using the NFS application protocol (also known as NFS datastores).

Experiments conducted in the VMware performance labs show that:

• SIOC regulates VMs’ access to shared I/O resources based on the disk shares assigned to them. During periods of I/O congestion, VMs are allowed to use only a fraction of the shared I/O resources, in proportion to their relative priority as determined by their disk shares.

• SIOC helps isolate the performance of latency-sensitive applications that issue small (≤8KB) I/O requests from the increase in I/O latency caused by larger (≥32KB) requests that other applications issue to the same shared storage.

• If the VMs do not fully utilize their portion of the allocated I/O resources on a shared storage device, SIOC redistributes the unutilized resources to those VMs that need them in proportion to the VMs’ disk shares. This results in a fair allocation of storage resources without any loss in their utilization.

http://www.vmware.com/files/pdf/sioc-nfs-perf-vsphere5.pdf
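The proportional-share behavior in these bullets can be sketched in Python as a weighted max-min ("water-filling") split of abstract I/O capacity. This illustrates only the arithmetic: under congestion every VM gets a slice proportional to its disk shares, and capacity a VM does not use is re-split among the others by shares. It does not model how SIOC actually enforces shares across hosts and datastores; the VM names, share values, and capacity units are hypothetical.

```python
def allocate_io(capacity: float, shares: dict[str, float],
                demand: dict[str, float]) -> dict[str, float]:
    """Share-proportional split of `capacity`, redistributing unused slices.

    VMs whose demand fits inside their proportional slice get exactly their
    demand; the leftover is re-split among the remaining VMs by shares.
    Assumes every VM has positive shares.
    """
    alloc = {vm: 0.0 for vm in shares}
    active = {vm for vm in shares if demand.get(vm, 0.0) > 0}
    remaining = capacity
    while active:
        total = sum(shares[vm] for vm in active)
        # VMs whose outstanding demand fits inside their proportional slice
        capped = {vm for vm in active
                  if demand[vm] <= remaining * shares[vm] / total}
        if not capped:
            # Congestion: everyone wants more than their slice, so split by shares.
            for vm in active:
                alloc[vm] = remaining * shares[vm] / total
            break
        for vm in capped:
            alloc[vm] = demand[vm]
            remaining -= demand[vm]
        active -= capped
    return alloc


if __name__ == "__main__":
    # VM "a" uses only 20 of its 50-unit slice; "b" receives the unused 30.
    print(allocate_io(100.0, {"a": 1000, "b": 1000}, {"a": 20.0, "b": 100.0}))
```

Allocating satisfied VMs first and then re-splitting the remainder is what keeps the allocation fair without leaving capacity idle while another VM still wants it.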