Tuesday, July 13. 2010

Steve Herrod, VMware's CTO, Introduces VMware vSphere 4.1

vSphere 4.1 Memory Enhancements - Compression

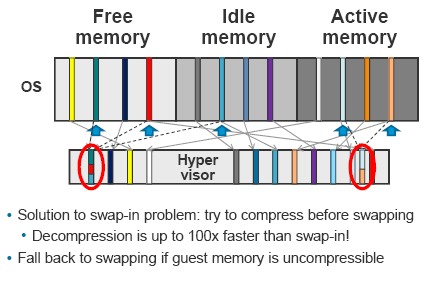

Finally, with Transparent Memory Compression, vSphere 4.1 compresses memory on the fly to increase the amount of memory that appears to be available to a given VM. Transparent Memory Compression matters most for workloads where memory, rather than CPU cycles, is the constraint. ESX/ESXi provides a Memory Compression cache to improve virtual machine performance when memory is overcommitted. Memory Compression is enabled by default; when a host's memory becomes overcommitted, ESX/ESXi compresses virtual pages and stores them in memory.

Because accessing compressed memory is faster than accessing memory swapped to disk, Memory Compression lets ESX/ESXi overcommit memory without significantly hindering performance. When a virtual page needs to be swapped, ESX/ESXi first attempts to compress it. Pages that compress to 2 KB or smaller are stored in the virtual machine's compression cache, increasing the effective memory capacity of the host; pages that do not are swapped to disk as before. You can set the maximum size of the compression cache, or disable Memory Compression altogether, in the Advanced Settings dialog box of the vSphere Client.
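To make the 2 KB rule concrete, here is a minimal shell sketch of the decision taken for a page that is about to be swapped. It assumes a 4 KB guest page and uses `gzip` purely as a stand-in compressor; it does not reproduce the compression algorithm ESX/ESXi actually uses, and `classify_page` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical illustration of the "2 KB or smaller" rule.
# gzip is only a stand-in; ESX/ESXi uses its own compressor internally.
PAGE_SIZE=4096        # assumed 4 KB guest page
CACHE_THRESHOLD=2048  # pages compressing to <= 2 KB go to the compression cache

# classify_page FILE: print "cache" if FILE compresses to <= 2 KB, else "swap"
classify_page() {
  compressed_size=$(gzip -c "$1" | wc -c | tr -d ' ')
  if [ "$compressed_size" -le "$CACHE_THRESHOLD" ]; then
    echo "cache"
  else
    echo "swap"
  fi
}

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT

head -c "$PAGE_SIZE" /dev/zero    > "$workdir/zero_page"    # highly compressible
head -c "$PAGE_SIZE" /dev/urandom > "$workdir/random_page"  # effectively incompressible

echo "zero page:   $(classify_page "$workdir/zero_page")"    # zero page:   cache
echo "random page: $(classify_page "$workdir/random_page")"  # random page: swap
```

A page full of zeros shrinks to a few dozen bytes and would land in the cache, while random data does not compress at all and would still be swapped.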

vSphere 4.1 Network Traffic Management - Emergence of 10 GigE

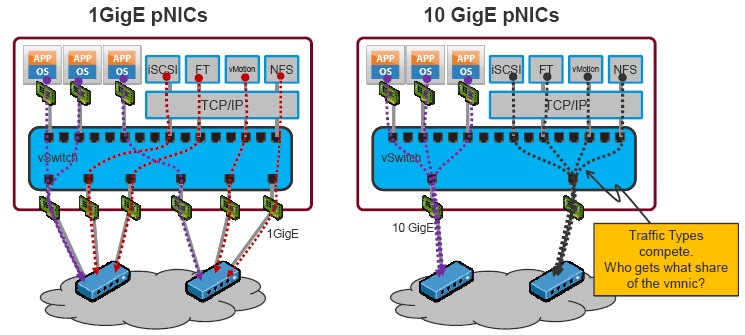

The diagram at left should be familiar to most. When using 1GigE NICs, ESX hosts are typically deployed with NICs dedicated to particular traffic types. For example, you may dedicate four 1GigE NICs to VM traffic, one NIC to iSCSI, another to vMotion, and another to the service console. Each traffic type gets dedicated bandwidth by virtue of the physical NIC allocation.

Moving to the diagram at right: ESX hosts deployed with 10GigE NICs are likely to be deployed (for the time being) with only two 10GigE interfaces, and multiple traffic types will be converged over those two interfaces. As long as the load offered to a 10GigE interface stays below 10Gbps, everything is fine, because the NIC can service the offered load. But what happens when the offered load from the various traffic types exceeds the capacity of the interface, say 11Gbps offered to a 10GigE interface? Something has to suffer. This is where Network IO Control (NetIOC) steps in. It addresses oversubscription by letting you set the relative importance of predetermined traffic types.
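The oversubscription question boils down to simple arithmetic. This sketch sums the egress load offered by each converged traffic type and compares it with the capacity of a single uplink; `check_load` and the traffic mix are hypothetical values chosen to reproduce the 11Gbps example, not measurements.

```shell
#!/bin/sh
# check_load CAPACITY_MBPS LOAD_MBPS...
# Sums the offered egress load of each traffic type sharing one uplink
# and reports whether the uplink can service it.
check_load() {
  capacity=$1; shift
  offered=0
  for load in "$@"; do
    offered=$((offered + load))
  done
  if [ "$offered" -le "$capacity" ]; then
    echo "ok: ${offered} of ${capacity} Mbps"
  else
    echo "oversubscribed by $((offered - capacity)) Mbps"
  fi
}

# Hypothetical mix on one 10GigE uplink: VM traffic, iSCSI, vMotion, FT logging (Mbps)
check_load 10000 4000 3000 2000 1000   # ok: 10000 of 10000 Mbps
check_load 10000 5000 3000 2000 1000   # oversubscribed by 1000 Mbps
```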

NetIOC is controlled with two parameters—Limits and Shares.

Limits, as the name suggests, set a cap for a traffic type (e.g. VM traffic) across the NIC team. The value is specified in absolute terms, in Mbps. When set, that traffic type will not exceed the limit *outbound* (egress) from the host.

Shares specify the relative importance of a traffic type when traffic types compete for a particular vmnic (physical NIC). Shares are specified in abstract units between 1 and 100. For example, if iSCSI has a shares value of 50 and FT logging has a shares value of 100, FT traffic will get twice the bandwidth of iSCSI when they compete. If both were set to 50, or both to 100, they would get the same level of service (bandwidth).
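The FT/iSCSI example works out as a proportional split: each competing traffic type receives capacity × its shares ÷ the sum of all shares. This shell sketch assumes every traffic type wants more than its share, so the whole vmnic is contended, and it rounds down to whole Mbps. NetIOC only applies shares when traffic types actually compete, which the sketch does not model. `share_split` is a hypothetical helper name.

```shell
#!/bin/sh
# share_split CAPACITY_MBPS SHARES...
# Prints one bandwidth figure (Mbps) per traffic type, proportional to its shares.
share_split() {
  capacity=$1; shift
  total=0
  for s in "$@"; do
    total=$((total + s))
  done
  out=""
  for s in "$@"; do
    out="$out $((capacity * s / total))"
  done
  echo "${out# }"   # strip the leading space
}

share_split 10000 50 100   # iSCSI vs FT logging: 3333 6666
share_split 10000 50 50    # equal shares:        5000 5000
```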

There are a number of preset values for shares ranging from low to high. You can also set custom values. Note that the limits and shares apply to output or egress from the ESX host, not input.

Remember that shares apply to the vmnics; limits apply across a team.
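Because limits apply across the team rather than per vmnic, a limit caps the combined egress of a traffic type over all uplinks in the team. A minimal sketch with hypothetical numbers and a hypothetical helper `team_egress`; it only shows the team-wide cap and says nothing about how NetIOC spreads the capped traffic across individual vmnics.

```shell
#!/bin/sh
# team_egress LIMIT_MBPS LOAD_MBPS...
# LOAD values are the offered egress of one traffic type on each vmnic in the team.
# Prints the combined egress after the team-wide limit is applied.
team_egress() {
  limit=$1; shift
  total=0
  for load in "$@"; do
    total=$((total + load))
  done
  if [ "$total" -gt "$limit" ]; then
    total=$limit
  fi
  echo "$total"
}

team_egress 8000 6000 6000   # 8000: capped by the team-wide limit
team_egress 8000 3000 2000   # 5000: under the limit, unaffected
```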

Monday, July 12. 2010

vSphere 4.1 offers improved vMotion speeds

Useful vSphere 4.1 knowledgebase articles

The VMware knowledge base contains some useful articles regarding the new vSphere 4.1 release.

- Using Tech Support Mode in ESXi 4.1

- Troubleshooting ESXi 4.1 Scripted Install errors

- Recreate vSphere 4.0 lockdown mode behavior in vSphere 4.1

- vCenter Server 4.1 fails to install or upgrade with the error: This installation package is not supported by this processor type

- Migrating to the vCenter Server 4.1 database

- Update Manager 4.1 patch repository features

- Lockdown mode configuration after upgrading from ESXi 4.0 to 4.1

- Changes to VMware Support Options in vSphere 4.1

- Securing Credentials in vMA 4.1

- Overview of Active Directory integration in ESX 4.1 and ESXi 4.1

- ALUA parameters in the output of ESX/ESXi 4.1 commands

- USB support for ESX/ESXi 4.1

Sunday, July 11. 2010

vSphere 4.1 Storage Enhancements - Storage IO control

With the release of vSphere 4.1, Storage IO Control allows cluster-wide storage IO prioritization. This allows better workload consolidation and helps reduce the extra costs associated with over-provisioning. Storage IO Control extends the constructs of shares and limits to handle storage IO resources. The amount of storage IO allocated to virtual machines during periods of IO congestion can be controlled, which ensures that more important virtual machines get preference over less important ones for IO resource allocation.

When Storage IO Control is enabled on a datastore, ESX/ESXi begins to monitor the device latency that hosts observe when communicating with that datastore. When device latency exceeds a threshold, the datastore is considered congested, and each virtual machine that accesses it is allocated IO resources in proportion to its shares. Shares are set per virtual machine and can be adjusted individually as needed. Without this control, low-priority VMs can eat into the IO bandwidth available to high-priority VMs; with it, storage allocation stays in line with VM priorities.

This feature enables per-datastore priorities (shares) for VMs to improve total throughput, and enforces shares at the cluster level for all workloads accessing a datastore. Configuring Storage I/O Control is a two-step process:

1. Enable Storage I/O Control for the datastore.

2. Set the number of storage I/O shares and upper limit of I/O operations per second (IOPS) allowed for each virtual machine. By default, all virtual machine shares are set to Normal (1000) with unlimited IOPS.
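The allocation rule described above (do nothing until latency crosses the threshold, then divide the datastore's IO in proportion to shares) can be sketched in shell. The 30 ms threshold and 3500 IOPS datastore capacity are hypothetical inputs, `sioc_alloc` is a hypothetical helper, and the High (2000) and Low (500) values next to Normal (1000) are assumed to be VMware's standard share presets. Per-VM IOPS limits are left at the default of unlimited, so they are not modelled.

```shell
#!/bin/sh
# sioc_alloc LATENCY_MS THRESHOLD_MS DATASTORE_IOPS SHARES...
# Below the threshold nothing is throttled; above it, the datastore's IOPS
# are divided among VMs in proportion to their shares.
sioc_alloc() {
  latency=$1; threshold=$2; iops=$3; shift 3
  if [ "$latency" -le "$threshold" ]; then
    echo "not congested"
    return
  fi
  total=0
  for s in "$@"; do
    total=$((total + s))
  done
  out=""
  for s in "$@"; do
    out="$out $((iops * s / total))"
  done
  echo "${out# }"
}

# Three VMs with High, Normal and Low shares on one datastore
sioc_alloc 20 30 3500 2000 1000 500   # not congested
sioc_alloc 45 30 3500 2000 1000 500   # 2000 1000 500
```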

Duncan Epping : Storage IO Control, the movie

Scott Drummonds : Storage IO Control

VirtualMiscellaneous : Storage IO Control - SIOC

Thursday, July 8. 2010

VMware vSphere shared storage comparison guide

- Drobo

- Hewlett-Packard

- Iomega

- Netgear

- QNAP

- Synology

- Thecus

http://searchstorage.techtarget.com.au/articles/42004-VMware-vSphere-shared-storage-guide

Wednesday, July 7. 2010

Two great tips for troubleshooting with Out-of-band management

Every once in a while you will run into problems that can only be fixed at the ESX console; at least, I do. When you have finally found the IP address or hostname and loaded the MKS Java applet in your favourite browser, the output scrolls by too fast to read and your keyboard isn't mapped correctly. These two problems are easy to fix; the real problem you will have to fix yourself. :-)

First, let's start with the scrolling problem. When you issue a command like esxcfg-vswitch to check your vSwitch configuration, you only see the last part of the output and can't scroll back. Instead, redirect the output of the command into a file and use nano to walk through it.

```shell
# Append the vSwitch listing to network.txt, then page through it in nano
esxcfg-vswitch -l >> network.txt
nano network.txt
```

This type of redirection sends the output into the file network.txt. Unlike single-'>' redirection, it does not erase the old contents (if any) of network.txt; instead, the esxcfg-vswitch -l output is appended to the file.
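To see the difference between the two operators without an ESX host at hand, this small sketch writes to a scratch file with both. The `echo` lines are stand-ins for `esxcfg-vswitch -l`, and the temporary directory is an assumption of the sketch.

```shell
#!/bin/sh
# Compare >> (append) with > (truncate, then write).
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
out="$workdir/network.txt"

echo "first run"  >> "$out"
echo "second run" >> "$out"
echo "lines after two appends: $(wc -l < "$out" | tr -d ' ')"   # lines after two appends: 2

echo "third run" > "$out"
echo "lines after one overwrite: $(wc -l < "$out" | tr -d ' ')" # lines after one overwrite: 1
```

Because `>>` appends, running the same command twice leaves two copies of the listing in network.txt; use `>` when you want a fresh snapshot each time.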

The second problem I have run into a lot is an incorrect keyboard mapping. This is easily fixed by loading a different keyboard translation table with loadkeys, a program whose main purpose is to load the kernel keymap for the console.

For a US keyboard layout, the following will do the trick:

```shell
loadkeys us
```

Saturday, June 26. 2010

High-performance & Scalable 5-bay All-in-1 NAS Server for vSphere

The Synology DS1010+ runs on the renewed system firmware, Synology DSM 2.3, offering comprehensive applications and features designed specifically for SMBs. Comprehensive network protocol support ensures seamless file sharing across Windows, Mac, and Linux platforms. Windows ADS integration allows the DS1010+ to fit quickly and easily into an existing business network with no need to recreate user accounts on the device. The User Home feature minimizes the administrator's effort in creating a private shared folder for a large number of users, and sub-folder privilege settings further extend the flexibility to allocate permissions for different workgroups.

With either NFS or iSCSI target support in Synology DSM 2.3, the Synology DS1010+ can serve as a storage asset for VMware.

- CPU Frequency: 1.67GHz, Dual Core

- Floating Point

- Memory Bus: 64bit@DDR800

- Memory: 1GB

- Internal HDD: 3.5" SATA(II) X5 or 2.5" SATA/SSD X5

- Max Internal Capacity: 10TB (5x 2TB hard drives)

- Hot Swappable HDD

- Size (HxWxD): 157mm X 248mm X 233mm

- External HDD Interface: USB 2.0 port X4, eSATA port X1

- Weight: 4.25kg

- LAN: Gigabit X2

- Wireless Support

"We are pleased that the Synology DS1010+ qualifies for the VMware Ready™ logo, signifying to customers that it has passed specific VMware testing and interoperability criteria and is ready to run their mission-critical business applications and operations," said Bernie Mills, senior director, alliance programs, VMware.

Thursday, June 10. 2010

vCenter Change Insight

Although VMware's website still has a landing page for VMware vCenter ConfigControl, the rumour goes that this product will be rebranded as vCenter Change Insight. This soon-to-be-released product will be able to track, analyze, assess, and take corrective actions to maintain configuration integrity. It will also be able to set up policies that invoke workflows on a selected set of entities, on a schedule or in response to an event.

The preliminary feature list looks very promising:

- Auto-documents environment, all entities, relationships, dependencies

- Tracks and alerts on configuration and relationship changes real time

- Assesses configurations against past, peers and best practices

- Takes corrective actions via policies (e.g. call out to Orchestrator)

Benefits

- Risk mitigation through improved visibility into ripple-through effects

- Improved opex through visibility and streamlined remediation

- Integrates with existing products and processes

- Track, analyze, assess & take corrective actions to maintain configuration integrity

With the integration of vCenter Change Insight and Orchestrator you’re able to make custom mass configuration changes and corrections.

You'd better keep a close watch on the new vSphere Team blog "vThink IT", where David Friedlander and Bogomil Balkansky have already posted their first articles.